Emotion Recognition - From Scratch! 😐😀😞😧😱🤢🤬🤨(GitHub)

*** it may take a few seconds to start predictions depending on your hardware - please only run on desktop/laptop, not mobile. lower-latency solutions are in progress ***

-

methodology: multi-task transfer learning approach to predict valence [-1,1], arousal [-1, 1], and 8 emotion labels [neutral, happy, sad, surprise, fear, disgust, anger, contempt]

-

valence and arousal are proxies for overall emotional state. the goal of this methodology was to push the neural network to learn emotion through valence and arousal, rather than learning emotion labels directly. by creating an interdependency between valence, arousal, and emotion, we can explore how performance on these related tasks changes due to their collinearity.
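
one way to picture the interdependency described above is a single training loss that sums the three tasks. the sketch below is an assumption, not the project's actual loss: it uses squared error for valence and arousal, cross-entropy for the 8 emotion labels, and hypothetical weights `w_valence`, `w_arousal`, `w_emotion` that the source does not specify.

```python
import math

# the 8 emotion labels listed in the methodology
EMOTIONS = ["neutral", "happy", "sad", "surprise",
            "fear", "disgust", "anger", "contempt"]


def multitask_loss(pred, target, w_valence=1.0, w_arousal=1.0, w_emotion=1.0):
    """Weighted sum of two regression losses and one classification loss.

    pred:   {"valence": float, "arousal": float, "probs": list of 8 softmax probs}
    target: {"valence": float, "arousal": float, "emotion": label string}
    The weights are hypothetical knobs, not values from the project.
    """
    # squared error for the two continuous targets in [-1, 1]
    loss_valence = (pred["valence"] - target["valence"]) ** 2
    loss_arousal = (pred["arousal"] - target["arousal"]) ** 2

    # cross-entropy for the categorical target (clamped to avoid log(0))
    true_index = EMOTIONS.index(target["emotion"])
    loss_emotion = -math.log(max(pred["probs"][true_index], 1e-12))

    return (w_valence * loss_valence
            + w_arousal * loss_arousal
            + w_emotion * loss_emotion)


example_pred = {"valence": 0.4, "arousal": 0.1, "probs": [0.125] * 8}
example_target = {"valence": 0.6, "arousal": 0.1, "emotion": "happy"}
example_loss = multitask_loss(example_pred, example_target)
```

because all three terms flow back through the same shared layers, a gradient step that improves valence or arousal also moves the features the emotion classifier depends on.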

-

note: valence + arousal are used for multi-task knowledge distillation purposes and are not yet included in the current predictions.

-

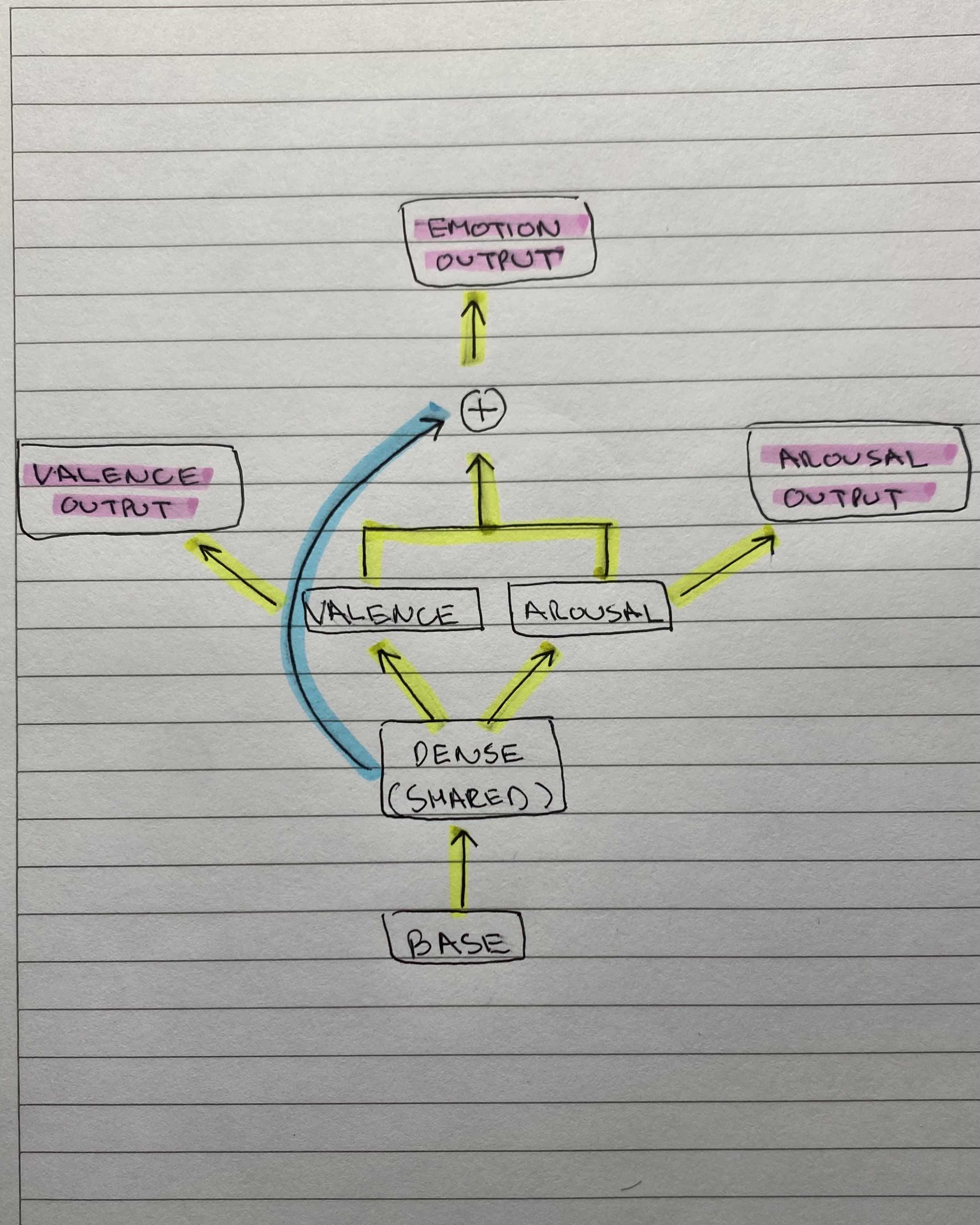

neural network architecture: NASNetMobile base pretrained on ImageNet, followed by a shared fully-connected layer (dense0). after dense0, the valence and arousal heads each have their own fully-connected layer with relu, followed by a linear output layer. the expression head has a skip connection that combines the aforementioned dense layers, followed by a softmax output for classification. see below for more information:

-

valence head: base -> dense0 -> dense1 -> linear output

-

arousal head: base -> dense0 -> dense2 -> linear output

-

expression head: base -> [dense0 + concat(dense1, dense2)] -> softmax layer
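
the three heads above can be sketched in keras roughly as follows. this is a minimal sketch under stated assumptions, not the project's actual code: the layer width `units`, the `input_shape`, global average pooling on the base, and reading `dense0 + concat(dense1, dense2)` as one concatenation of all three dense layers are all guesses. batch normalization, dropout, and l2 regularization are left out to keep it short.

```python
def build_model(input_shape=(224, 224, 3), units=256, weights="imagenet"):
    """Three-headed model: valence, arousal, expression.

    `units`, `input_shape`, and the pooling choice are illustrative
    assumptions; the source does not state them.
    """
    # imported lazily so this file can be read or imported without tensorflow
    import tensorflow as tf
    from tensorflow.keras import layers

    base = tf.keras.applications.NASNetMobile(
        include_top=False,
        weights=weights,
        input_shape=input_shape,
        pooling="avg",  # assumption: global average pooling before dense0
    )

    # shared fully-connected layer
    dense0 = layers.Dense(units, activation="relu", name="dense0")(base.output)

    # per-task fully-connected layers
    dense1 = layers.Dense(units, activation="relu", name="dense1")(dense0)
    dense2 = layers.Dense(units, activation="relu", name="dense2")(dense0)

    # regression heads with linear outputs
    valence = layers.Dense(1, activation="linear", name="valence")(dense1)
    arousal = layers.Dense(1, activation="linear", name="arousal")(dense2)

    # expression head: skip connection over dense0, dense1, dense2
    skip = layers.Concatenate(name="skip")([dense0, dense1, dense2])
    expression = layers.Dense(8, activation="softmax", name="expression")(skip)

    return tf.keras.Model(
        inputs=base.input,
        outputs=[valence, arousal, expression],
        name="emotion_multitask",
    )
```

calling `build_model(weights=None)` builds the same graph without downloading the ImageNet weights, which is handy for checking shapes.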

-

overall architecture:

-

data + training: the AffectNet8 dataset (~290,000 images) was used for training, with an image generator creating random augmentations (crop, shift, etc.). the model was trained on 85% of the training data, and a 15% validation set was used for early stopping to prevent overfitting. it was first trained for 5 (+ 1 patience) epochs with a frozen base and then fine-tuned for 16 (+ 3 patience) epochs. the model also used batch normalization, dropout, and l2 regularization.
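
the early-stopping rule mentioned above can be sketched in plain python. this is a simplified stand-in for a framework callback, and it assumes "patience" means the number of consecutive epochs without a validation improvement that are tolerated before stopping. the validation losses below are made up for illustration.

```python
def run_with_early_stopping(val_losses, max_epochs, patience):
    """Walk through per-epoch validation losses and stop early.

    Returns (epochs_run, best_epoch), both 1-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    epochs_run = 0

    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        epochs_run = epoch
        if loss < best_loss:
            # new best validation loss: remember it and reset the counter
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # no improvement for `patience` epochs in a row

    return epochs_run, best_epoch


# made-up validation losses for the two phases
frozen_phase = run_with_early_stopping(
    [0.90, 0.80, 0.70, 0.75, 0.60], max_epochs=5, patience=1)
finetune_phase = run_with_early_stopping(
    [1.00, 0.90, 0.95, 0.92, 0.85, 0.90, 0.90, 0.90, 0.80],
    max_epochs=16, patience=3)
```

with a patience of 1 the frozen phase stops at the first epoch that fails to improve, while the fine-tuning phase tolerates up to three such epochs before giving up.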

-

deployment: the keras model (~60mb) was converted to a tensorflow.js graph model (~10mb) with float16 quantization. the website is written in vanilla javascript and html. everything runs client-side in the browser, so no data is collected (no backend server is used!). opencv (haar cascade) was used to detect faces, draw bounding boxes, and crop in on them.
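
to give a feel for what float16 quantization does to weights, here is a small standard-library sketch. it is not the tensorflow.js converter, only an illustration of the idea: each weight is stored in 2 bytes (IEEE 754 half precision) instead of 4, at the cost of a small rounding error. the sample weights are made up.

```python
import struct


def quantize_float16(weights):
    """Pack floats as little-endian IEEE 754 half precision (2 bytes each)."""
    return struct.pack(f"<{len(weights)}e", *weights)


def dequantize_float16(blob):
    """Unpack half-precision bytes back into python floats."""
    count = len(blob) // 2
    return list(struct.unpack(f"<{count}e", blob))


sample_weights = [0.1, -0.25, 0.003, 1.5]  # made-up values

float32_blob = struct.pack(f"<{len(sample_weights)}f", *sample_weights)
float16_blob = quantize_float16(sample_weights)
restored = dequantize_float16(float16_blob)

# largest absolute rounding error introduced by the half-precision round trip
max_error = max(abs(a - b) for a, b in zip(sample_weights, restored))

print(len(float32_blob), "bytes as float32")
print(len(float16_blob), "bytes as float16")
print("max rounding error:", max_error)
```

values that are exactly representable in half precision (such as -0.25 and 1.5) survive unchanged, while others (such as 0.1) pick up a small error.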